Analytics engineering workflows with dbt and Synq

What’s dbt (and why it’s awesome)

Unless you’ve been living under a rock you’ve likely heard of dbt. dbt helps data teams work like software engineers - from development to testing and documentation, and deployment. More than 20,000 companies use dbt and equally impressive is the community dbt has built with over 50,000 members.

We’re big users of dbt ourselves and have seen first-hand how the tool can transform both data and teams. When we speak with data teams we frequently hear different variants of this

dbt has completely changed the way our entire team works

Before using dbt we were blind to changes introducing issues downstream

I left my current company because they wouldn’t let us use dbt

We like dbt so much we wrote a guide to building reliable data with dbt tests.

In the following sections we go through four core workflows in dbt to build reliable data at scale.

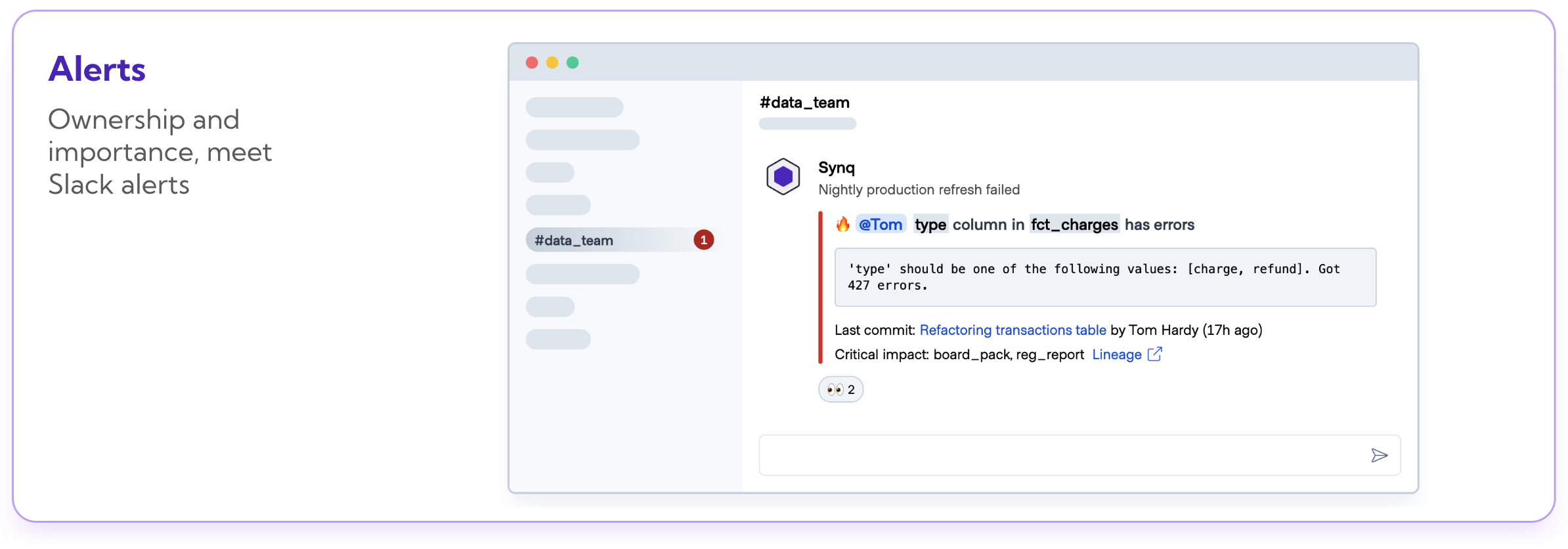

Making alerts actionable with ownership and importance

Slack alerts from a failing dbt test is undeniably a better way to learn about a data issue than the VP of marketing spotting an issue in a dashboard and tagging the whole data team.

Two things are often missing in this workflow. It’s unclear who should look at a test failure and there’s no clear definition of importance. This means that critical issues are not acted on fast enough.

Define ownership and importance in dbt to address this.

Define ownership

Define ownership using the owner meta tag for your most important data models or tests. Do it at a team rather than an individual level to make it clear for everyone who’s expected to act on issues.

Define importance

Whether you have a few test failures per week or dozens per day, it’s important to be explicit about when an issue is important. This helps you prioritise and know when you should drop everything to fix it.

As a rule of thumb, data models that are critical either have a business-critical use case (e.g. used for board reporting) or have a lot of downstream dependencies (e.g. core users table).

Start by using the dbt meta configurations and create a criticality: high tag for your most important data models or tests.

When you’ve defined ownership and importance, whenever you have a test failure you can open dbt docs and inspect the metadata details which makes expectations for who should act and with what urgency clear.

With Synq

Synq automatically displays owner and importance (🔥) in the alert so it’s clear who should act and with what urgency directly from the alert. You can use the owner definition to route alerts to the right team if you’ve got a busy alerting channel.

Analyse your metadata

dbt generates artifacts such as manifest.json and run_results.json that contains metadata about your runs and project’s resources. This metadata can help you answer questions like

- What’s the uptime of my most important data models

- Is my largest data model running slower over time

- Is the number of rows that’s failing a test getting worse over time

Model uptime

What percentage of time over the last 30 days has your data been stale or had issues? Understanding the uptime by model can help you answer this and you can then reason about if that’s a level you’re happy with.

Test uptime

Long failing data tests are akin to the broken windows theory. If you stop ignoring a few failing tests before you know it, your team will get accustomed to the idea that tests are not that important, and you’ll start seeing the data reliability deteriorate.

Model runtime

Whether to save money or make sure that your pipeline is not getting gradually slower so stakeholders don’t have fresh data, regularly monitoring model runtime is a good idea. This deep dive from the dbt team has some excellent steps on how you can debunk runtime within dbt.

In the Observability w/ dbt talk, Kevin Chan and Jonathan Talmi walk through how you can use dbt metadata to create a dashboard to analyse runtime over time and extract metadata from the dbt artifacts.

With Synq

Automatically see analytics for each assets - from tests to models and jobs and monitor runtime, volume, uptime and freshness

Map downstream dependencies with exposures

Exposures make it possible to define and describe downstream use of your dbt project, such as dashboards, reverse ETL tools or machine learning models. You can share these with your stakeholders in dbt docs. Specify relevant data such as the link to a dashboard, owner and criticality to make it explicit how issues impacting a downstream dependency should be acted on.

You can also use the Dashboard Status Tile in combination with exposures to display if there have been any upstream test or freshness errors directly in a Looker, Tableau, Mode or Sigma dashboard.

With Synq

Automatically infer lineage from all your BI assets and dbt so you don’t have to manually maintain exposures. Synq can also be configured to alert downstream users in Slack if there are issues upstream of their dashboards.

Testing workflows for scaling teams

dbt is no longer only being used by startups. Large companies such as Nasdaq use it to build business critical data. But using dbt for larger data teams (>30) creates a unique set of challenges

- You’ll start experiencing alert fatigue in Slack as issues happen all the time

- Too many failing tests and making it hard to know which ones to pay attention to

- An explosion of assets and dependencies across the data modelling and BI layer make the lineage impossible to use

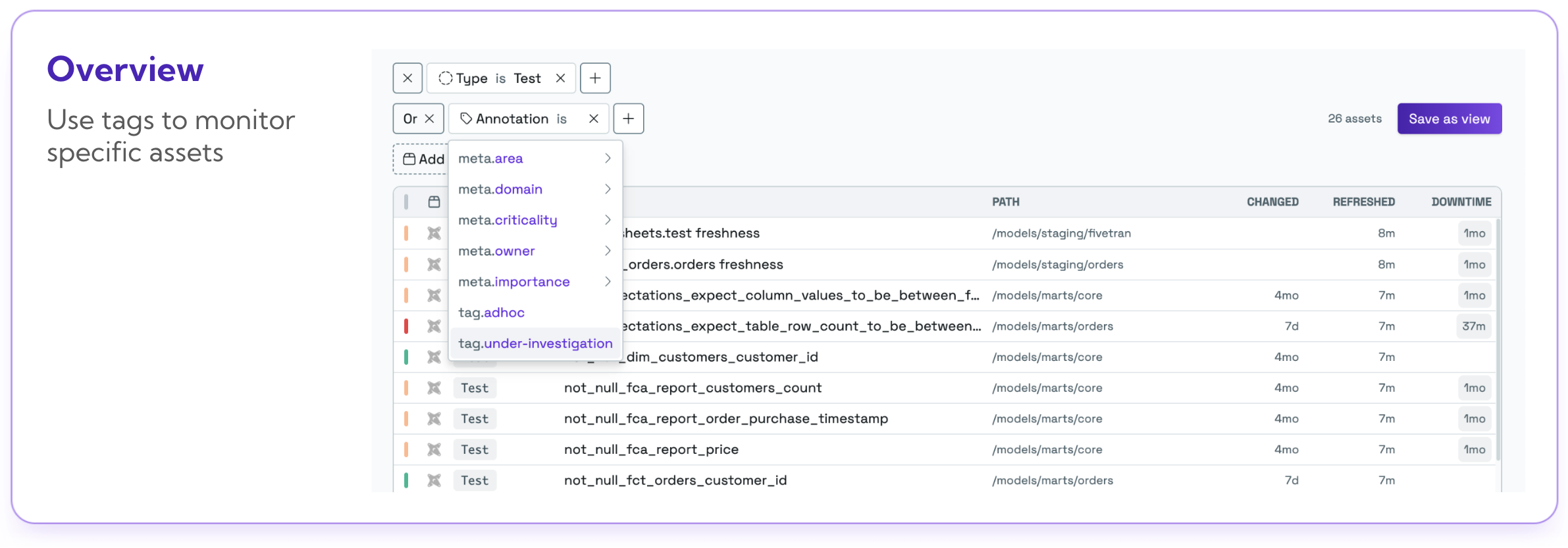

Tag issues that are under investigation

If you’ve known issues and are waiting for upstream teams to fix the underlying problem, you can mark these tests as “under-investigation” to avoid being notified of issues you’re already aware of over and over again.

models:

- name: stg_customers

description: All customers

columns:

- name: customer_id

description: Unique identifier for customer

tests:

- unique

tags:

- under-investigationYou can then run these tests in a separate job so you’re not blind to them and can monitor if they get worse over time, but also don’t get distracted by them in your production run.

dbt test --exclude tag:under-investigationWith Synq

Segment all your data assets by tag, type or owner to monitor the parts of your stack that matters to you

Monitor long failing tests

Long failing tests are akin to the broken window theory. Start ignoring one for too long and you’ll have dozens of failing tests nobody cares about. The best solution for this is to monitor long failing tests and dedicate time to fixing them.

In dbt you can search the logs for a failing test to go through the most recent handful of runs and understand if a test has just started failing or if it’s a systematic issue that needs fixing at the root cause.

With Synq

Track all your tests in one place. Sort by downtime and filter by domain or owner to make the right teams accountable.

Deteriorating test failure results

You may have a test failure on an important data model, but you know it’s safe to ignore as it’s just 2 unimportant mismatched employee IDs. But what happens if the test starts to deteriorate and you start seeing hundreds of failing rows? You’d risk missing that until a stakeholder notifies you that something looks off.

dbt console logs show you how many rows are failing a test.

Use dbt debugging logs to play close attention to failing tests. While you may tolerate a few duplicate accounts in your revenue data, you’ll want to know about it before stakeholders if that becomes thousands overnight.

With Synq

Analyse the magnitude of failing over time in one unified interface over time

Conclusion

In this post we’ve explored how to extend your dbt workflows to build reliable data at scale

- Making alerts actionable with ownership and importance

- Analyse your metadata

- Map downstream dependencies with exposures

- Implement testing workflows for scaling teams

For more details, we recommend diving into the dbt docs. Happy modelling.

.png)

.png)