Launching our Automated Testing & Monitoring Advisor

In May, we launched Scout, our always-on data SRE. You can think of Scout as a seasoned data engineer, with all the context of historical issues, a photographic memory of your 2,000-model lineage, and the ability to traverse code, business logic, and git commits in seconds. Since then, Scout has been used to debug and understand the root cause of hundreds of data issues with an 80% acceptance rate on recommended root causes.

Today, we’re extending Scout’s capabilities to automatically suggest relevant tests and SYNQ monitors that fit your business context.

The problem: Alert fatigue and disjointed tests

Data teams are no strangers to testing, but testing well is hard. You need to anticipate everything that could go wrong with the data and express that in code. This requires a deep understanding of upstream systems, data transformations, and downstream use cases of the data.

Even the best data teams struggle to get this right. As a consequence, they end up on the back foot, with stakeholders being the first to spot data issues, while at the same time drowning in a never-ending stream of data alerts.

When we analyzed dozens of workspaces, we saw that 97% of all tests deployed in dbt were not null, unique, or accepted values tests, hardly representing the real-world challenges and complexities of the underlying data.
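To see where your own project stands, you can compute the same ratio. The snippet below is a minimal sketch, not how SYNQ ran its analysis: it assumes you have already extracted a flat list of test type names from your project, and the function name and example data are hypothetical.

```python
from collections import Counter

# The three basic dbt generic tests named in the text.
BASIC_TESTS = {"not_null", "unique", "accepted_values"}


def basic_test_share(test_names):
    """Return the fraction of tests whose type is one of the basic tests."""
    if not test_names:
        return 0.0
    counts = Counter(test_names)
    basic = sum(n for name, n in counts.items() if name in BASIC_TESTS)
    return basic / len(test_names)


# Hypothetical project with 8 tests, 7 of which are basic.
example = ["not_null"] * 4 + ["unique"] * 2 + ["accepted_values", "relationships"]
share = basic_test_share(example)
print(f"{share:.1%} of tests are basic")
```

A high share is not a problem in itself, but it can be a hint that the tests say little about how the data is actually used.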

As teams and systems scale, this only gets harder. It’s impossible for one person to have all the context, so you end up locally optimizing for the part of the data value chain you understand, without the bigger picture in mind.

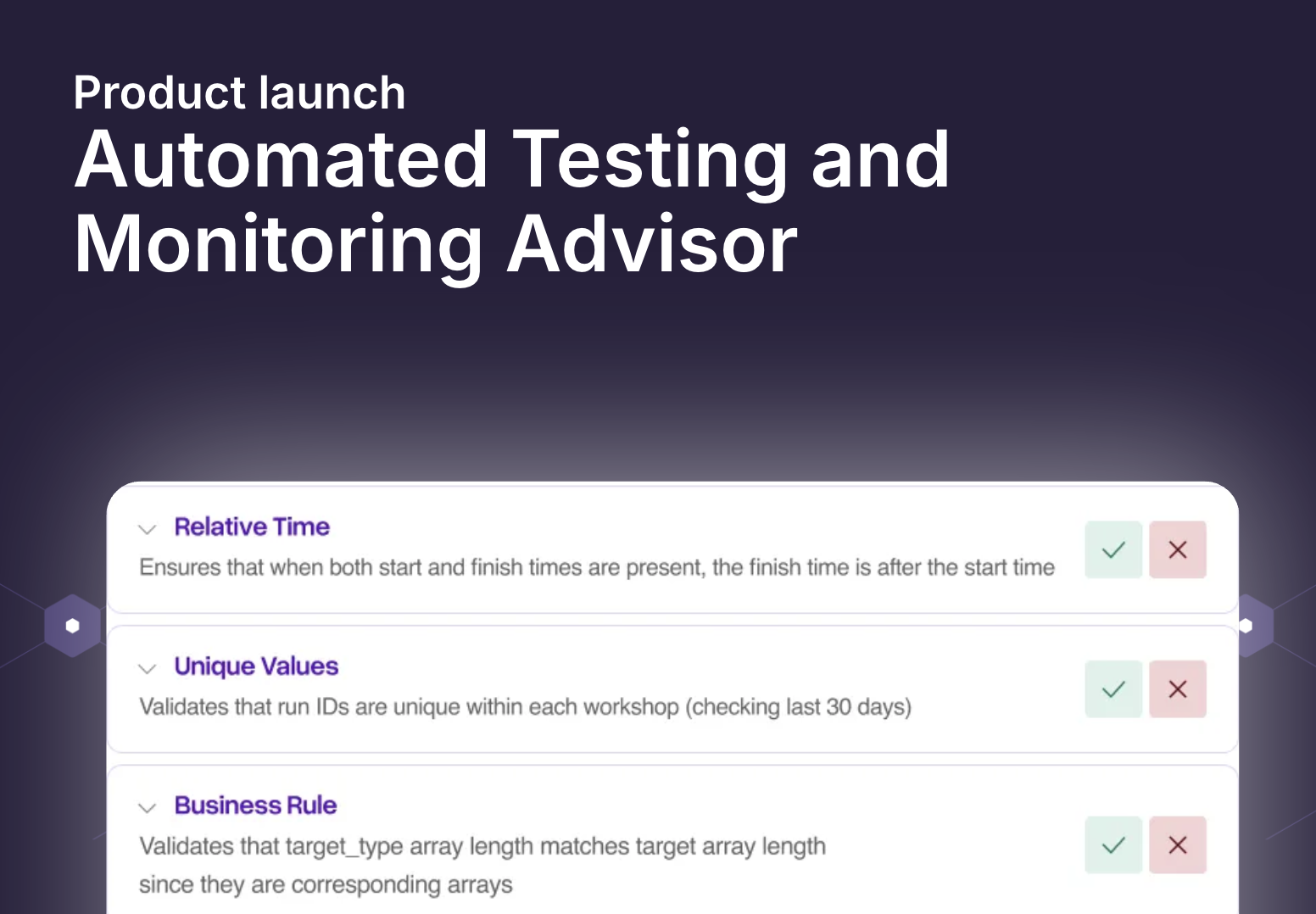

Meet our automated monitoring and testing advisor

We’re excited to launch the Scout Testing and Monitoring Advisor to give data teams superpowers to solve these testing challenges. It’s designed to help you build a best-in-class testing strategy, whether you’re just getting started with testing or already have thousands of tests.

We’ve built it on a blueprint inspired by the workflow we’ve seen the best teams follow (we’ve written an entire guide about it!). Here’s how it works:

- Lead with business context - add context about your business, such as when data should be ready by and how it’s used

- Define your testing strategy - give context about your testing strategy or use our out-of-the-box recommendations

- Define your critical data products - tell SYNQ what data matters most

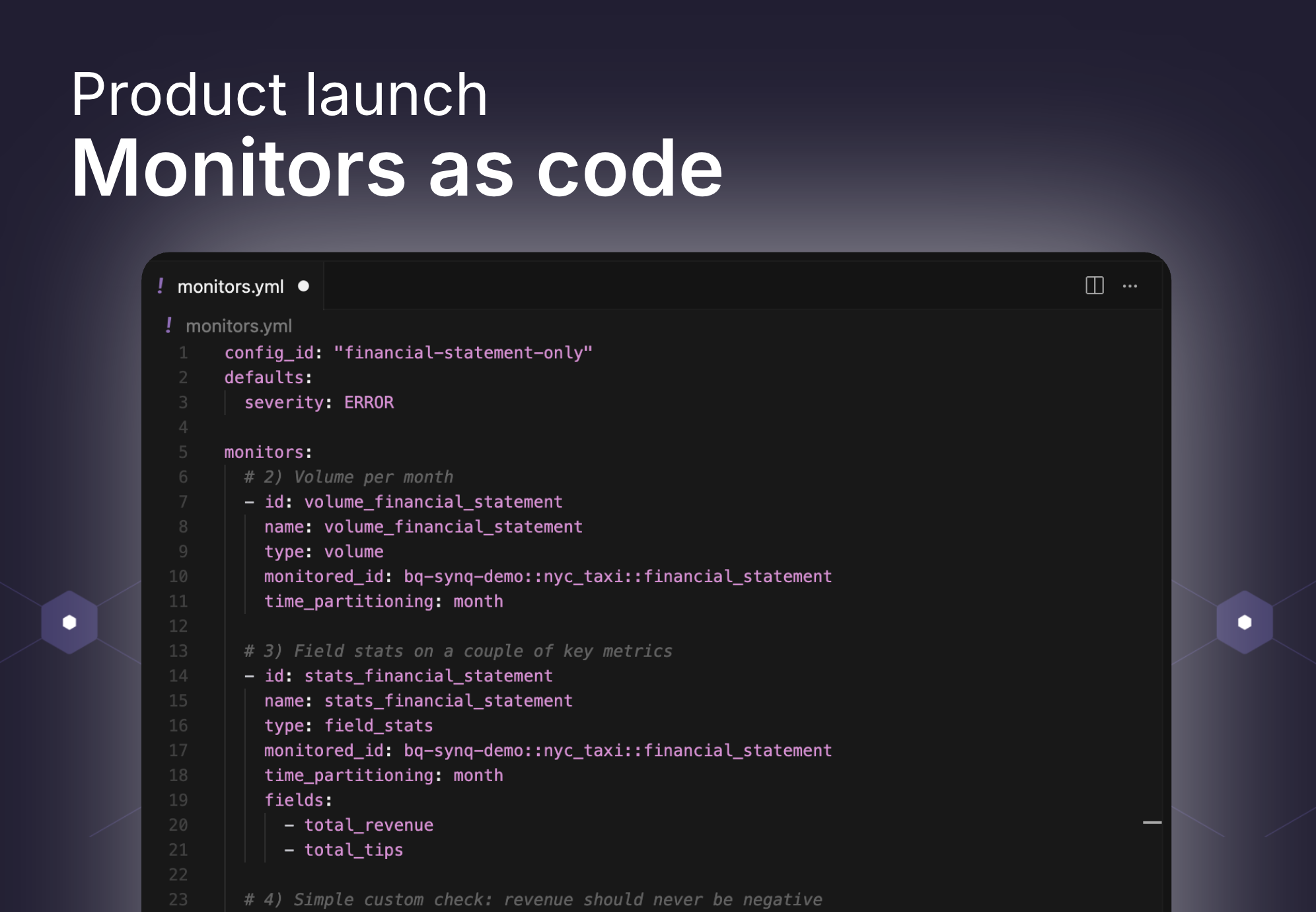

The agent is optimised for analytics engineering workflows, and tests and monitors can be deployed directly to dbt, SQLMesh, and SYNQ, so it works with the tools you already use.

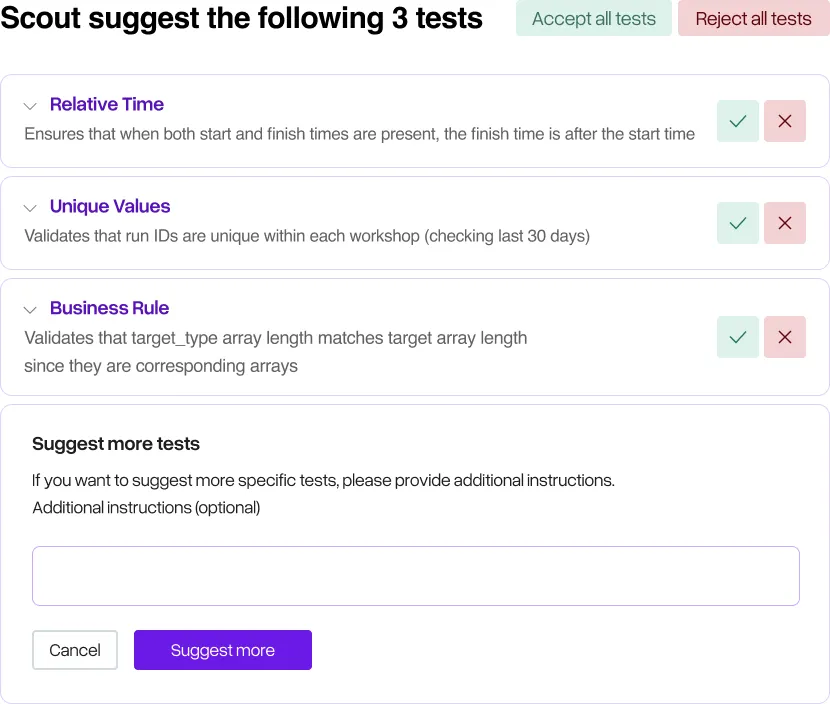

Today: optimized testing and monitoring for tables

You can use the testing and monitoring advisor in the SYNQ platform today. When you navigate to a table, Scout will give you a set of suggested monitors and tests. Instead of defaulting to the most basic not_null and unique tests, Scout analyses each column: where its data comes from, where it flows, and which data product or use case it ultimately serves.

You can also provide specific context about your business or data use case in a prompt, and the tests suggested will reflect that.

You’re still fully in control and we’ll only push new code changes with your permission.

Next up: robust testing for pipelines and data products

We’re already beta testing the next extension of Scout’s testing and monitoring capabilities: the ability to deploy entire testing blueprints to important pipelines and data products.

For example, for a machine learning pipeline, you may have Scout suggest end-to-end tests based on this input:

“Analyse my ML pipeline upstream from the cltv_predictions table and deploy any relevant tests. The data is updated weekly at 8 PM. Here’s a list of my most highly predictive features and examples of previously uncaught issues, …”

For a regulatory report for a bank, it may look like this:

“We provide a regulatory report based on the table reg_ifrs9_monthly monthly on the first day of the week. It’s key we understand if there are any deviations, and we care about even smaller deviations on our most important columns: fraud_rates, complaints, and capital_ratio.”

We’ll combine this with a library of opinionated best practices learned from engaging with thousands of data teams, such as avoiding redundant tests in the lineage and adjusting low signal-to-noise tests on an ongoing basis.
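To make "redundant tests in the lineage" concrete, here is a minimal sketch of one possible heuristic, not Scout's actual logic: a test is flagged when the same test type on the same column name already exists on an upstream model. It assumes the column passes through unchanged, and the model names, data shapes, and function names are all hypothetical.

```python
def ancestors(model, parents):
    """Return all models upstream of `model` in the lineage graph."""
    seen = set()
    stack = list(parents.get(model, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(parents.get(node, []))
    return seen


def redundant_tests(tests, parents):
    """Return tests whose (column, test type) pair is already tested upstream."""
    covered = {}
    for model, column, kind in tests:
        covered.setdefault((column, kind), set()).add(model)
    flagged = []
    for model, column, kind in tests:
        # Flag the test if any upstream model carries the same test.
        if covered[(column, kind)] & ancestors(model, parents):
            flagged.append((model, column, kind))
    return flagged


# Hypothetical lineage: raw_orders -> stg_orders -> fct_orders
parents = {"stg_orders": ["raw_orders"], "fct_orders": ["stg_orders"]}
tests = [
    ("stg_orders", "order_id", "not_null"),
    ("fct_orders", "order_id", "not_null"),
    ("fct_orders", "amount", "not_null"),
]
flagged = redundant_tests(tests, parents)
print(flagged)
```

In this example only the downstream `order_id` test is flagged. A real implementation would need column-level lineage to know whether a column is transformed along the way, since a join or aggregation can reintroduce nulls or duplicates.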

Outcome: A robust testing strategy

Our ambition with Scout is to get you in the top 5% of data teams when it comes to building reliable data. Here are the outcomes you should expect to see from day one.

- Maximise the chance to catch issues

- Minimise alert fatigue

- Save time

Context is king, and Scout’s test and monitor suggestions are only possible because SYNQ captures everything: lineage, logs, usage, issues, incidents, and their outcomes. That history gives Scout a foundation that most humans or tools don’t have.

The best thing about Scout is that the more you use it, the better it gets. As you declare incidents, give feedback on monitors, or remove noisy tests, we automatically capture this to continuously improve your testing strategy. We also give your team clear feedback on why our system suggests specific tests or monitors, so they can upskill as they use Scout.

We’re excited to see what you’ll do with Scout!

Get started today

Here are a few ways to learn more about Scout’s testing and monitoring capabilities.

- Watch the launch webinar

- Book a demo

- Try SYNQ Free

- Learn more at synq.io