How to Implement Data Observability? A Step-by-Step Guide


Data has become central to how businesses operate, and keeping it reliable is now a must. Data observability gives teams the insight and tools to spot issues quickly, understand their impact, and keep systems running smoothly. Modern data stacks often involve hundreds of pipelines and models, powering everything from internal dashboards to customer-facing applications. With data now driving key business decisions and products, teams are borrowing principles from software engineering (such as monitoring, testing, and Service Level Agreements (SLAs)) to manage data quality at scale. In other words, if you wouldn’t release software without monitoring, you shouldn’t ship a data product without observability.

What Is Data Observability and Why Does It Matter?

Achieving observability means being able to quickly answer key questions when something goes wrong: What’s broken? Who is impacted? Where did it originate? How do we fix it? By implementing robust data observability practices, data and analytics engineers can drastically reduce “data downtime” (periods when bad data or pipeline failures disrupt operations) and build greater trust in the insights delivered to stakeholders.

Data observability refers to your ability to monitor, detect, and understand the health of data as it moves through your systems. Its goal is to ensure that data remains accurate, consistent, timely, and reliable so that potential issues (like broken pipelines or data quality lapses) can be identified and resolved before they cause bigger problems. Simply put, observability gives you end-to-end visibility into your data’s condition, much like application monitoring does for software.

Importantly, data observability is comprehensive. It provides a complete view of the health of your data by combining multiple signals such as freshness timestamps, row counts, schema changes, and lineage information, rather than focusing on any single metric. By monitoring these dimensions together and automating checks and alerts, teams can quickly detect anomalies, diagnose pipeline failures, and resolve issues before they impact downstream users. In the following sections, we’ll explore best practices for putting this approach into practice.

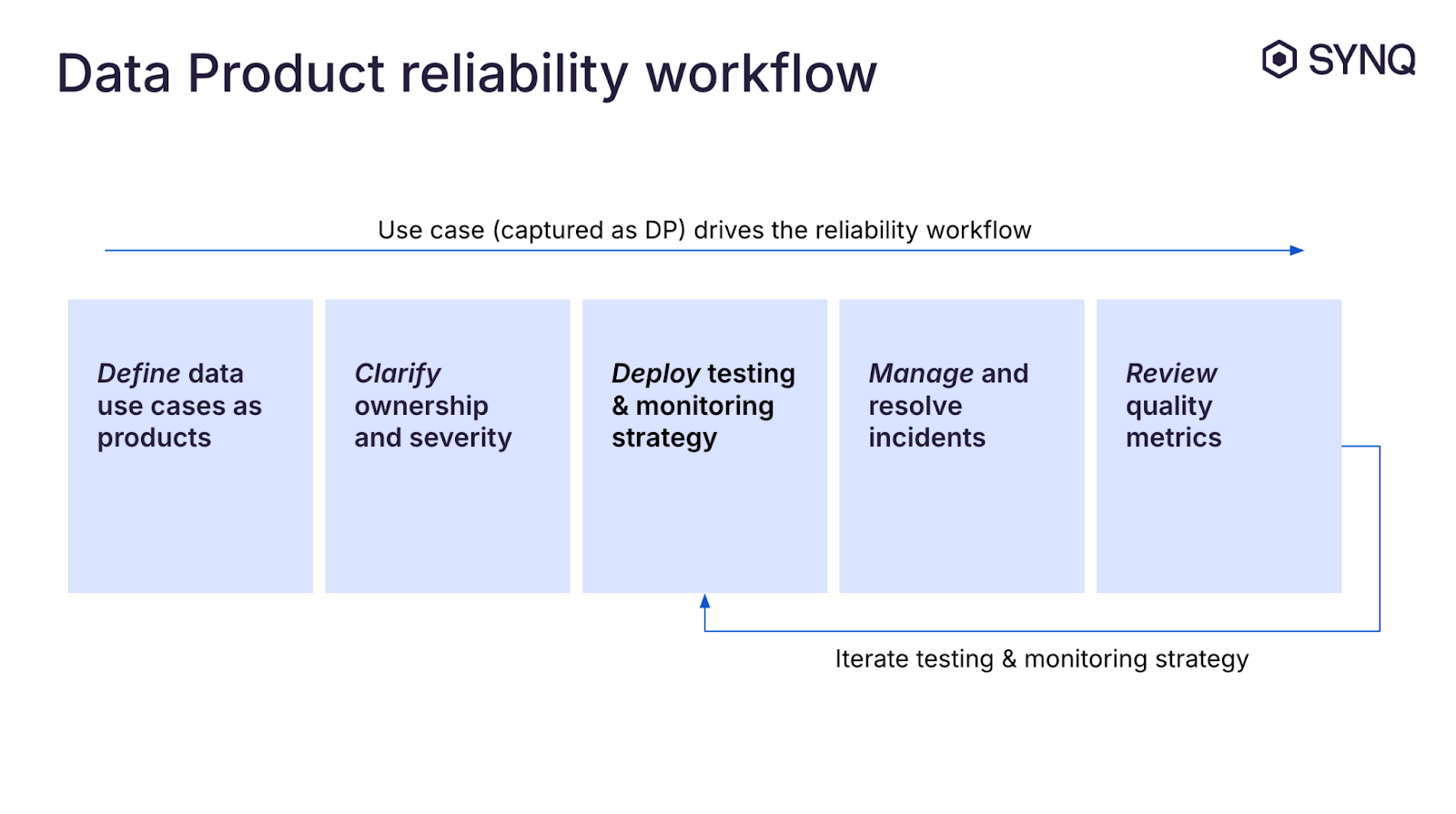

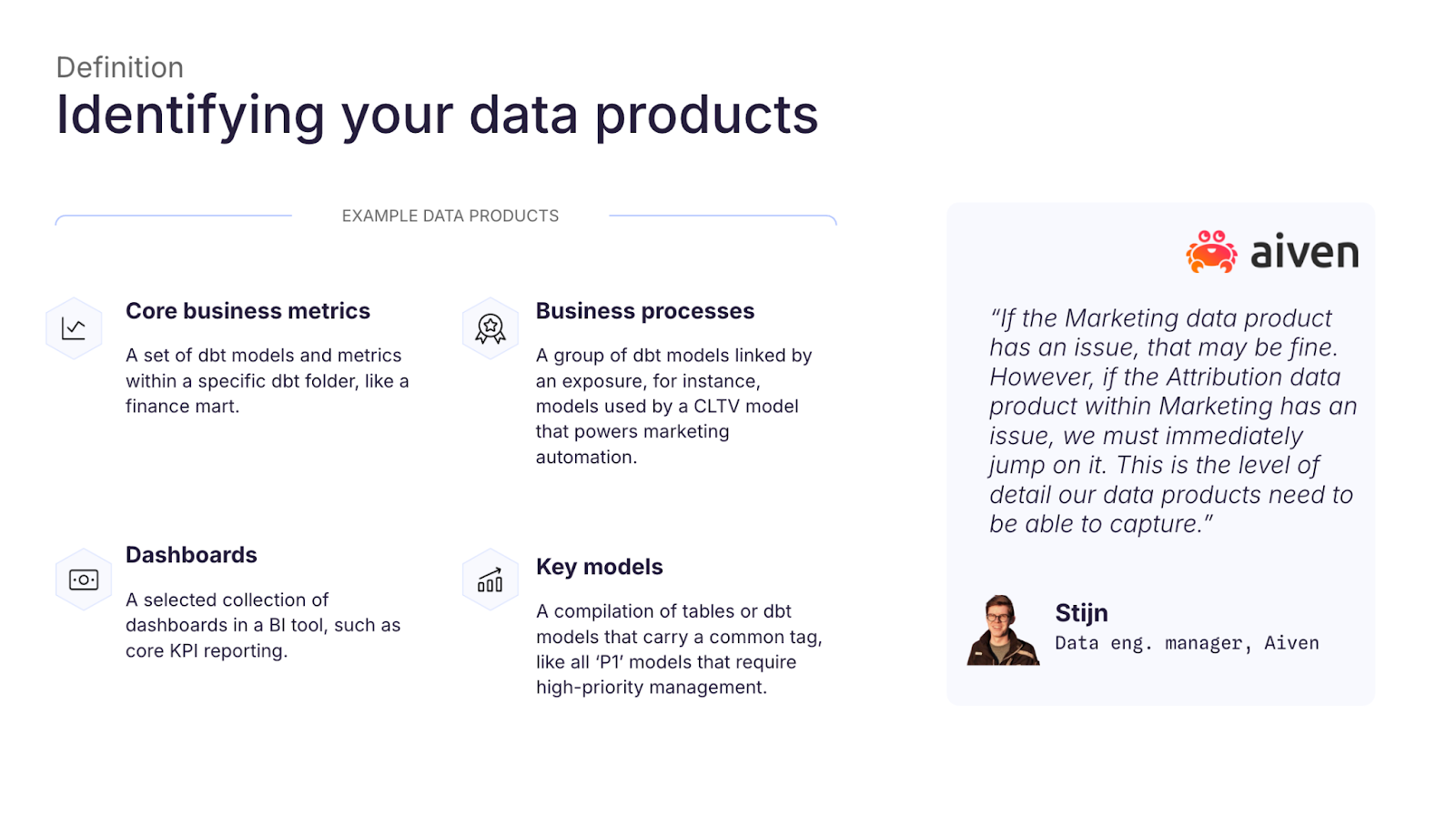

Treat Data as Products and Prioritize What to Observe

A foundational step is to identify what data assets are the most important for your organization and treat them as “data products.” At its core, a data product is any output of the data team that delivers value to a consumer (whether a person or an application). This could be a BI dashboard, a machine learning model, an analytics dataset, or any data deliverable with a specific use and audience. What makes it a product is the intentionality behind it: it has clear ownership, defined quality expectations, and known consumers – much like a software product that is built to be reliable and maintainable.

Not every table or data pipeline in your warehouse needs the same level of monitoring. Focus on your most critical data products first. These are the dashboards or data feeds that would cause significant pain if they broke (for example, revenue reporting, customer-facing metrics, or key ML model outputs). It’s helpful to classify data products by priority tiers (e.g., P1, P2, P3) to denote their criticality. Higher-tier (P1) products have a bigger business impact and thus warrant stricter observability (more thorough testing, real-time alerts, tighter SLAs), whereas lower-tier (P3) products might be monitored with looser parameters.

For instance, a P1 data product might be a mission-critical revenue dashboard or an anomaly detection model used in production. It requires near 24/7 freshness and immediate alerting on failures. P2 might include important but not mission-critical reports (e.g. an executive KPI dashboard updated daily). P3 could be a low-stakes internal report or exploratory dataset used infrequently. The table below illustrates these tiers and examples:

| Data Product Tier | Description and Examples |
| --- | --- |
| P1 (Critical) | Business-critical data products that demand the highest reliability. Examples: live revenue dashboards, billing metrics, or real-time fraud detection models. These systems require 99%+ uptime, rapid refreshes, and instant alerts on any data quality issue. |
| P2 (High) | Important data products with moderate impact. Examples: executive dashboards or operational reports that are used regularly but not in real-time customer-facing scenarios. They warrant solid monitoring and timely alerts, but occasional delays or minor issues are not catastrophic. |
| P3 (Low) | Lower-criticality data outputs such as weekly reports or ad-hoc analyses. Examples: a social media analytics report or an internal experiment results table. Basic monitoring is applied (e.g. daily checks), and these can tolerate longer issue resolution times. |
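
One way to make these tiers actionable is to record them as configuration next to each data product. The sketch below is a minimal Python illustration only: the class names, field names, product names, owners, and thresholds are all hypothetical, not values prescribed by this guide or by any particular tool.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    P1 = "critical"
    P2 = "high"
    P3 = "low"


@dataclass(frozen=True)
class MonitoringPolicy:
    check_interval_minutes: int  # how often monitors run (illustrative value)
    page_on_failure: bool        # whether a failure pages someone immediately


# Hypothetical defaults: stricter observability for higher tiers.
POLICIES = {
    Tier.P1: MonitoringPolicy(check_interval_minutes=15, page_on_failure=True),
    Tier.P2: MonitoringPolicy(check_interval_minutes=60, page_on_failure=False),
    Tier.P3: MonitoringPolicy(check_interval_minutes=24 * 60, page_on_failure=False),
}


@dataclass(frozen=True)
class DataProduct:
    name: str
    tier: Tier
    owner: str

    @property
    def policy(self) -> MonitoringPolicy:
        # The tier, not the individual product, decides how strictly we monitor.
        return POLICIES[self.tier]


revenue = DataProduct("revenue_dashboard", Tier.P1, owner="finance-analytics")
social = DataProduct("social_media_report", Tier.P3, owner="marketing-analytics")
```

Keeping the policy attached to the tier rather than to each product means that promoting a dataset from P3 to P1 is a one-line change that automatically tightens its monitoring.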

Establish Clear Ownership and SLAs for Data Quality

Treating data as a product goes hand-in-hand with assigning ownership. Every important data product should have a clearly identified owning team (or individual, if your team is small) responsible for its maintenance and quality. Ownership in practice means that team is accountable for responding to data incidents, notifying stakeholders of issues, and implementing fixes and preventive measures. For example, a “Marketing Analytics” team might own all marketing-related data products; if a dashboard breaks or data is delayed, that team is on point to address it and communicate impact.

Along with ownership, define Service Level Agreements (SLAs) for your high-priority data products. An SLA is essentially a reliability promise to the data consumers – it sets expectations on data availability, freshness, and quality. For a P1 data product, this might mean ensuring 99%+ uptime or freshness (e.g., data is updated by a certain hour) and a fast response time to any issues, whereas a P3 product could have a more relaxed target (say 95% freshness with slower incident response).
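
As a rough illustration of a freshness SLA of the "data is updated by a certain hour" kind, attainment can be measured as the share of daily loads that landed by the deadline. This is a minimal sketch with hypothetical function names and made-up timestamps; it assumes one load per day that lands on the same calendar day as its deadline.

```python
from datetime import datetime, time


def freshness_attainment(arrivals: list[datetime], deadline: time) -> float:
    """Fraction of daily loads that landed at or before the deadline."""
    if not arrivals:
        return 0.0
    on_time = sum(1 for ts in arrivals if ts.time() <= deadline)
    return on_time / len(arrivals)


def meets_sla(arrivals: list[datetime], deadline: time, target: float) -> bool:
    """True when attainment reaches the agreed target (e.g. 0.99 for a P1)."""
    return freshness_attainment(arrivals, deadline) >= target


# Made-up load completion times for four days.
arrivals = [
    datetime(2024, 1, 1, 5, 40),
    datetime(2024, 1, 2, 5, 55),
    datetime(2024, 1, 3, 7, 10),  # landed after the 06:00 deadline
    datetime(2024, 1, 4, 5, 30),
]
deadline = time(6, 0)
attainment = freshness_attainment(arrivals, deadline)  # 3 of 4 loads on time
```

With these sample values the product would miss a 99% target, which is the kind of signal that should prompt a conversation between the owning team and its consumers.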

To know if you’re meeting these SLAs, track your data quality KPIs. In practice, teams monitor the coverage of data quality checks (what percentage of the necessary tests/monitors are in place) and the health of the data (what percentage of those checks are currently passing). These two metrics together indicate whether a data product is within its reliability targets. It also helps to consider different dimensions of data quality – e.g. accuracy, completeness, consistency, uniqueness, timeliness, validity – and ensure your monitoring covers the ones that matter for each product.
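
The two KPIs described above reduce to simple ratios. The following sketch shows one way they might be computed; the `Check` class and the check names are hypothetical, and a real setup would populate them from your testing or observability tool rather than by hand.

```python
from dataclasses import dataclass


@dataclass
class Check:
    name: str
    implemented: bool      # is the test/monitor actually in place?
    passing: bool = False  # latest result; only meaningful if implemented


def coverage(checks: list[Check]) -> float:
    """Share of the required checks that are in place."""
    return sum(c.implemented for c in checks) / len(checks) if checks else 0.0


def health(checks: list[Check]) -> float:
    """Share of the implemented checks that are currently passing."""
    active = [c for c in checks if c.implemented]
    return sum(c.passing for c in active) / len(active) if active else 0.0


# Hypothetical required checks for one data product.
required = [
    Check("freshness", implemented=True, passing=True),
    Check("row_count", implemented=True, passing=True),
    Check("not_null_order_id", implemented=True, passing=False),
    Check("unique_order_id", implemented=False),
]
```

Here coverage is 3 of 4 required checks and health is 2 of the 3 implemented checks. Reporting both numbers matters: high health on low coverage can look reassuring while leaving most failure modes unmonitored.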

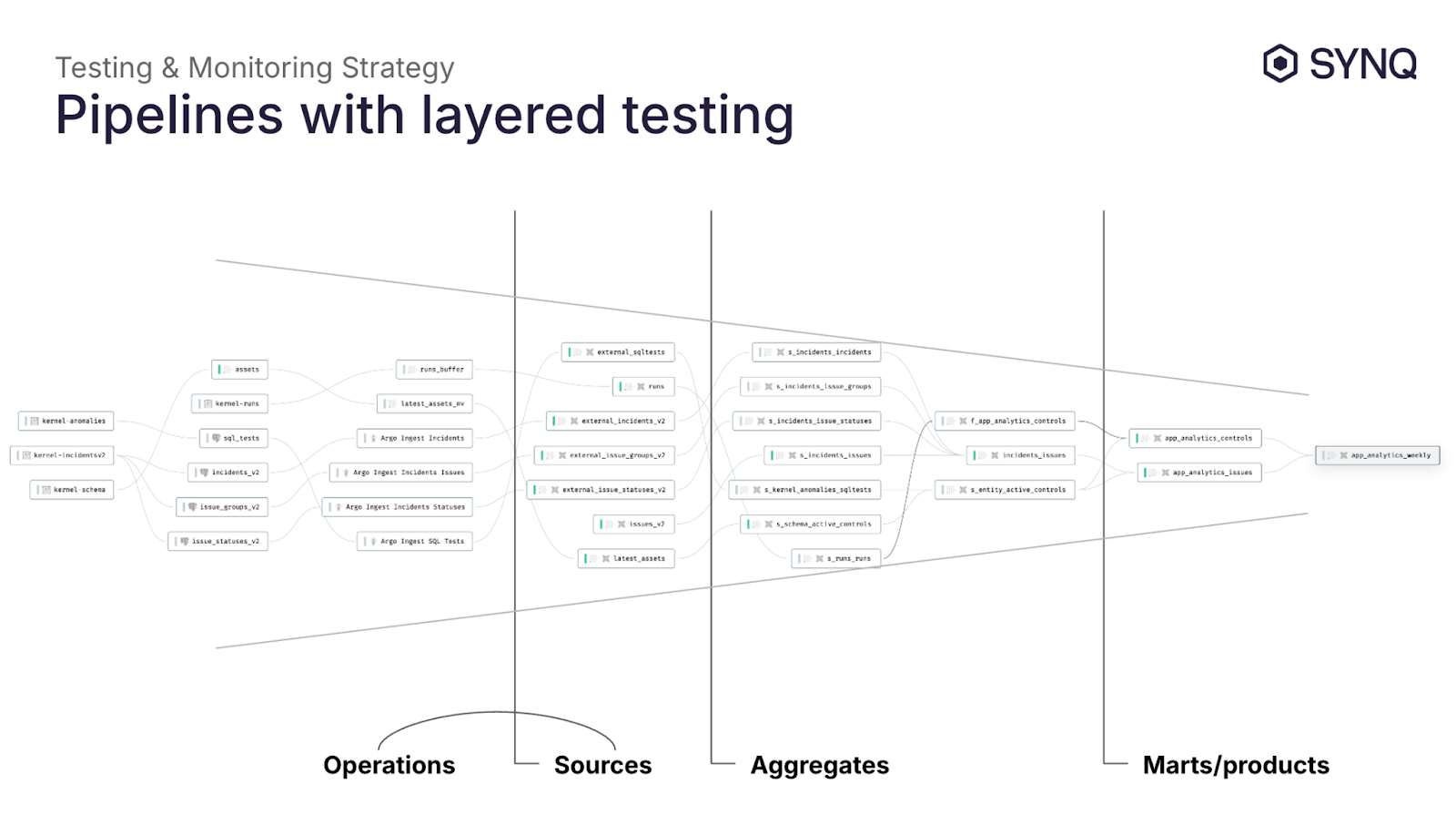

Implement a Layered Testing Strategy in Your Pipelines

One of the best practices in data observability is proactive testing of your data at various stages of the pipeline. Rather than waiting for end-users to notice something is wrong, you embed data quality tests as early and often as makes sense, but in a targeted way. A common approach is to use layered testing aligned with your data architecture:

- Source layer tests: Apply checks at raw data ingestion points to catch issues early (e.g., schema and basic validity checks on source tables). Most tests belong here, since catching errors at the source prevents bad data from propagating.

- Staging layer tests: Add logic-aware tests during transformations to verify they work as intended (for example, ensure a derived field or metric falls within expected ranges).

- Data mart/output tests: At the final output layer, include tests for business rules and critical metrics (e.g., no negative revenues in a finance dashboard).

Use tools like dbt to automate these tests as part of your pipeline. Avoid redundant testing: a naive “test every model” approach creates noise and overlaps, leading to false confidence. If an upstream test catches an issue, you don’t need to repeat it downstream. Focus on meaningful tests at key points rather than blanket coverage.
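
To make the three layers concrete, here is a minimal sketch in plain Python that operates on lists of dictionaries. In a real pipeline these checks would more likely be expressed as dbt tests or SQL assertions; the function names, column names, and sample rows below are hypothetical.

```python
def check_source(rows):
    """Source layer: schema and basic validity on raw records."""
    required = {"order_id", "amount", "currency"}
    errors = []
    for i, row in enumerate(rows):
        missing = required - set(row)
        if missing:
            errors.append(f"row {i}: missing columns {sorted(missing)}")
        elif row["order_id"] is None:
            errors.append(f"row {i}: order_id is null")
    return errors


def check_staging(rows):
    """Staging layer: a derived field falls within its expected range."""
    return [
        f"row {i}: discount_rate {row['discount_rate']} outside [0, 1]"
        for i, row in enumerate(rows)
        if not 0 <= row["discount_rate"] <= 1
    ]


def check_mart(rows):
    """Output layer: business rule, no negative revenue."""
    return [
        f"row {i}: negative revenue {row['revenue']}"
        for i, row in enumerate(rows)
        if row["revenue"] < 0
    ]


# Made-up sample data for each layer.
raw = [
    {"order_id": 1, "amount": 20.0, "currency": "USD"},
    {"order_id": None, "amount": 5.0, "currency": "USD"},
]
staged = [{"discount_rate": 0.1}, {"discount_rate": 1.4}]
mart = [{"revenue": 120.0}, {"revenue": -3.0}]

source_errors = check_source(raw)
staging_errors = check_staging(staged)
mart_errors = check_mart(mart)
```

Note that the null check on `order_id` lives only at the source layer. Following the advice above, it is not repeated in the staging or mart checks.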

Deploy Automated Monitoring and Anomaly Detection

No matter how thorough your tests are, there will always be unexpected issues (the “unknown unknowns”). This is where data monitoring comes into play as a complement to your tests. Data observability platforms provide anomaly detection capabilities that continuously watch your data for unusual patterns or breaks without you manually writing a test for each one.

Key things to watch are freshness (is the data updated on schedule?), volume (are record counts within normal ranges?), distribution of values (are metrics within expected bounds?), and schema (are there any unexpected changes in structure?). These anomaly monitors flag late data, missing or duplicate loads, out-of-range values, or broken schemas – issues you might not have anticipated with explicit tests. By learning your data’s normal behavior (often using machine learning), they can catch problems that weren’t explicitly tested or expected. For example, SYNQ’s platform includes built-in monitors for data freshness, volume, and outliers so that many anomalies are detected automatically.
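
For intuition, a freshness monitor and a volume monitor can be approximated with a few lines of Python. This sketch uses a simple z-score on historical row counts as a stand-in for the learned models that observability platforms typically use; it does not represent how any specific product works, and the function names, threshold, and sample numbers are assumptions.

```python
from datetime import datetime, timedelta
from statistics import mean, stdev


def is_stale(last_loaded_at: datetime, now: datetime, max_age: timedelta) -> bool:
    """Freshness: has the table gone longer than max_age without an update?"""
    return now - last_loaded_at > max_age


def volume_is_anomalous(history: list[int], today: int, z_threshold: float = 3.0) -> bool:
    """Volume: flag today's row count if it is far from the historical mean."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold


# Made-up daily row counts and load times.
history = [1000, 1020, 980, 1010, 990]
partial_load = volume_is_anomalous(history, today=400)
normal_load = volume_is_anomalous(history, today=1005)
stale = is_stale(
    last_loaded_at=datetime(2024, 1, 1, 6, 0),
    now=datetime(2024, 1, 1, 9, 30),
    max_age=timedelta(hours=2),
)
```

A fixed z-score threshold ignores seasonality such as weekday versus weekend volumes, which is one reason dedicated tools learn a baseline instead of relying on a single static rule.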

When deploying monitors, it’s important to tune them to balance sensitivity vs. noise. As noted earlier, monitoring every single table with every possible check can lead to a flood of alerts that aren’t all actionable. Start with broad coverage on critical tables and pipelines (your P1s), then refine over time. Use your data product definitions and lineage to focus monitoring on the areas where an anomaly truly matters. (For instance, it’s often more useful to monitor the end-to-end pipeline health of a critical dashboard and its key upstream dependencies than to apply dozens of generic monitors on low-level tables that feed less important outputs.)

Alerting, Incident Response, and Issue Resolution

Observability isn’t just about detecting problems. It also requires a plan for alerting and incident response. When a data quality issue is detected, the right people need to know about it immediately. A best practice is to funnel all data alerts into a single channel (for example, a dedicated Slack channel) so nothing slips through the cracks. Each alert should include context to aid troubleshooting (for instance, data lineage information to pinpoint what upstream source or downstream report is affected).

Since you’ve defined owners for each data product, tie your alerting into those ownership assignments. In other words, make sure the team responsible for a given data product automatically receives the alerts for any issues in that domain. This way, accountability is clear and response can be quick. For especially critical incidents (say a P1 issue breaching an SLA), have an escalation policy (similar to how engineering teams handle high-severity outages) to ensure a rapid response.
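
Ownership-based routing with escalation can be sketched as a small lookup. Everything named here is hypothetical, including the owner and tier mappings, the `#data-alerts` channel, and the fallback team; the code only builds a routing decision and does not call any messaging API.

```python
from dataclasses import dataclass

# Hypothetical ownership and tier registries, keyed by data product name.
OWNERS = {
    "revenue_dashboard": "finance-analytics",
    "campaign_report": "marketing-analytics",
}
TIERS = {"revenue_dashboard": "P1", "campaign_report": "P3"}


@dataclass
class Alert:
    product: str
    message: str
    sla_breached: bool = False


def route(alert: Alert) -> dict:
    """Decide who is notified and whether the alert is escalated."""
    # Assumption: unowned products fall back to a central platform team.
    owner = OWNERS.get(alert.product, "data-platform")
    # Only P1 products that have breached their SLA trigger escalation.
    escalate = TIERS.get(alert.product) == "P1" and alert.sla_breached
    return {"channel": "#data-alerts", "notify": owner, "escalate": escalate}


p1_breach = route(Alert("revenue_dashboard", "freshness SLA missed", sla_breached=True))
p3_issue = route(Alert("campaign_report", "row count dropped"))
```

Every alert still lands in the single shared channel, while the `notify` and `escalate` fields determine who is tagged and whether an on-call process is triggered.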

Finally, establish an incident review process. Treat data incidents as learning opportunities to improve your observability. After resolving an issue, analyze what went wrong and update your tests or monitors to prevent similar problems in the future. Over time, this continuous improvement will make your data pipelines more resilient.

Is Data Observability Difficult to Get Started With?

At first glance, data observability can feel daunting. Many teams assume it requires a heavy investment in tools, extensive instrumentation, and a full re-architecture of existing pipelines. In reality, most successful implementations start small and evolve gradually.

Common Perception vs. Reality

- Perception: Observability means monitoring every table, metric, and pipeline in the warehouse.

- Reality: Effective observability begins with a handful of critical data products (P1 assets) and expands coverage incrementally. You don’t need to boil the ocean to see results.

- Perception: Specialized skills are required to implement observability.

- Reality: Most modern observability tools integrate with frameworks data teams already use (like dbt or SQL-based transformations). This lowers the barrier to entry and lets teams adopt observability without learning entirely new workflows.

Why It’s Easier Than It Seems

- Leverage existing metadata: Many pipelines already collect lineage, freshness, and schema information. Observability tools can surface and enrich this metadata rather than requiring new manual instrumentation.

- Layered rollout: Start by adding basic freshness and volume monitors, then introduce anomaly detection and SLA tracking as maturity grows.

- Automated recommendations: Emerging solutions, including SYNQ Scout, can suggest tests or monitors based on historical incidents, further reducing the manual setup effort.

What Matters Most at the Start

The hardest part isn’t setting up monitors or writing tests; it’s deciding where to focus. Defining your critical data products and agreeing on ownership and SLAs creates the foundation for a manageable observability rollout. From there, tooling and automation can scale coverage quickly.

Conclusion

Implementing data observability is essential for ensuring trustworthy, reliable data. By treating data assets as products, complete with owners, SLAs, tests, and monitoring, you create a resilient data ecosystem that can grow with the business.

Remember that data pipelines will break or produce bad data at some point, but with strong observability in place, you won’t be caught off guard. Instead, you’ll catch problems early, understand their impact, and fix them before they erode stakeholder trust. In the end, data observability isn’t just about preventing disasters; it’s about enabling confidence in your data so that your organization can make decisions faster and with peace of mind.

You can find out more in our comprehensive guide to data observability.

Frequently Asked Questions About Implementing Data Observability

What are the key steps to implement data observability?

The main steps include treating data as products, establishing ownership and SLAs, prioritizing critical assets, layering different types of data tests, and scaling monitoring over time. Starting small and focusing on the most important data products helps ensure adoption.

Why is data observability important for data teams?

Data observability ensures that teams can trust the data powering business decisions and customer-facing products. It reduces data downtime, speeds up root cause analysis, and helps teams detect and fix issues before they impact stakeholders.

What tools can help implement data observability?

Many teams begin with SQL checks, dbt tests, or pipeline alerts. As complexity grows, specialized data observability platforms provide end-to-end monitoring, lineage, root cause analysis, and incident management workflows.

How does data observability relate to data quality?

Data observability is the practice that makes data quality measurable and manageable. While data quality defines standards like accuracy and completeness, observability ensures continuous monitoring so those standards are maintained.

When should a company adopt a data observability tool?

When to invest in data observability depends on your company. If your data team is managing multiple pipelines, supporting critical analytics, or fielding frequent “is this data correct?” questions, it’s likely time to adopt observability. Most teams benefit from introducing it once homegrown checks are no longer sufficient.
